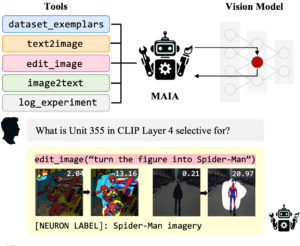

A Multimodal Automated Interpretability Agent

T. Rott Shaham*, S. Schwettmann*, F. Wang, A. Rajaram, E. Hernandez, J. Andreas, A. Torralba. ICML 2024.

An agent that autonomously conducts experiments on other systems to explain their behavior, by composing interpretability subroutines into Python programs.

[Project page] [Code]

FIND: A Function Description Benchmark for Evaluating Interpretability Methods

S. Schwettmann*, T. Rott Shaham*, J. Materzynska, N. Chowdhury, S. Li, J. Andreas, D. Bau, A. Torralba. NeurIPS 2023.

An interactive dataset of functions resembling subcomputations inside trained neural networks, for validating and comparing open-ended labeling tools, and a new method that uses Automated Interpretability Agents to explain other systems.

[Project page] [Dataset] [Code] [News]

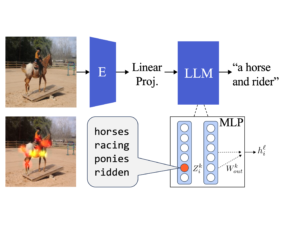

Multimodal Neurons in Pretrained Text-Only Transformers

S. Schwettmann*, N. Chowdhury*, A. Torralba. ICCV CVCL Workshop 2023 (Oral).

We find multimodal neurons in a transformer pretrained only on language. When image representations are aligned to the language model, these neurons activate on specific image features and inject related text into the model’s next token prediction.

[Project Page]

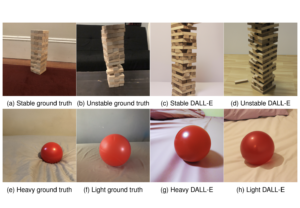

Intuitive Physics in Text-Conditional Image Generation

E. Kramer, S. Schwettmann*, P. Sinha*. CCN 2023.

We find that DALL-E’s generated images are more congruent with human intuitive physical judgments in the domain of optics (refraction and shadow) than in physical dynamics (stability and mass).

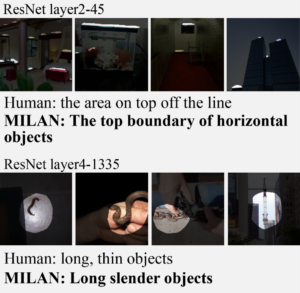

Natural Language Descriptions of Deep Features

E. Hernandez, S. Schwettmann, D. Bau, T. Bagashvili, A. Torralba, J. Andreas. ICLR 2022 (Oral).

We introduce a procedure that automatically labels neurons in deep networks with open-ended, compositional, natural language descriptions of their function.

[Project page] [Code and data] [MIT News article]

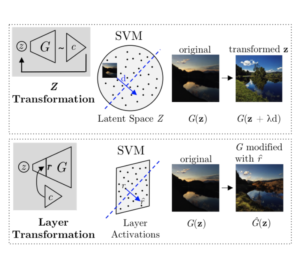

Toward a Visual Concept Vocabulary for GAN Latent Space

S. Schwettmann, E. Hernandez, D. Bau, S. Klein, J. Andreas, A. Torralba. ICCV 2021.

What is the overlap between the set of representations in a GAN and the set of concepts meaningful to humans in visual scenes? We introduce a method for building an open-ended vocabulary of primitive visual concepts represented in a GAN’s latent space.

[Project Page] [Code and data]

Latent Compass: Creation by Navigation

S. Schwettmann, H. Strobelt, M. Martino. Machine Learning for Creativity and Design Workshop, NeurIPS 2020.

We present a technique for few-shot learning of interpretable image transformations. Users wordlessly identify dimensions of interest inside a GAN’s latent space, and steer images along them.

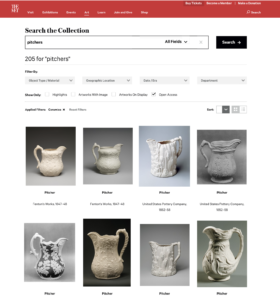

Gen Studio

[Met x Microsoft x MIT collaboration] 2019

Can we build deep generative models associated with archives of creative work:

> Where we can collaborate with the models to iterate archives forward?

> Where the models suggest a feature language underlying instances in a collection?

In this project with the Metropolitan Museum of Art, we build a model of the spaces in between objects in the Met’s digital collection, along with a user interface for exploring it. The project was exhibited at The Met Fifth Avenue in February 2019.

[Read an article by the Met about the project] [Read my article about the project]

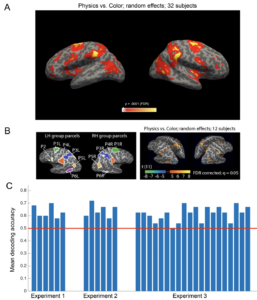

Invariant Representations of Mass in the Human Brain

S. Schwettmann, J. Tenenbaum, N. Kanwisher. eLife 2019.

We find the first evidence that the brain represents an object’s mass invariantly to the physical scenario through which that mass is revealed. This supports an account of physical reasoning in the human brain in which abstract physical variables serve as inputs to a forward model of dynamics, akin to a physics engine.

[Dataset on OpenNeuro]

Project Echo: Legal template agreements for artist-AI collaborations

J. Fjeld, M. Kortz, S. Klein, S. Schwettmann. 2019.

We introduce two legal templates for artist-AI collaborations: a short license for artists and a longer agreement for collaborations. The former is ideal for artists who want to license their work for use as input to machine learning algorithms. The longer agreement is for cases where the output may resemble the artist’s work and is therefore treated as joint work. The accompanying how-to guide provides further detail.

Find the templates on [GitHub]

Read more at the [Cyberlaw clinic]

Nymity

S. Schwettmann, S. Klein, C. Nesson.

The Nymity project, a collaboration with Harvard’s Berkman Klein Center for Internet & Society, is building toward a future where digital citizens control the models of identity and reputation underlying their communal discourse spaces. We are developing tools that make explicit and configurable what is often an implicit feature of a shared space: the nymity it affords its users. [Threads tool] [Threads GitHub]

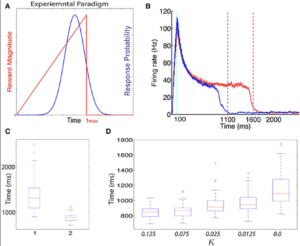

The Role of Multiple Neuromodulators in Reinforcement Learning That Is Based on Competition between Eligibility Traces

M. Huertas, S. Schwettmann, H. Shouval. Frontiers in Synaptic Neuroscience 2016.

We introduce a biologically plausible, synaptic-level model of reinforcement learning based on eligibility traces, which addresses the role of different neuromodulators.

* indicates equal contribution.